Adobe and Nvidia both announced AI image generators today — Firefly and Picasso, respectively — that do not use artists’ work for training data without their permission. Both companies also promise to pay artists for work generated in their signature styles, as does Getty Images.

According to the Nvidia announcement, its training data is fully licensed. Enterprise customers and partners can also use the platform to train custom models on their own proprietary data sets. Picasso lets people use text prompts to generate not only images but also 3D objects, scenes, and even videos.

As part of the announcement, Nvidia said it already has partnerships in place with Adobe, Getty Images, and Shutterstock.

Back in October of last year, Shutterstock said it would pay artists for their contributions, making it the first major image content company to offer fair and ethical AI image generation. According to Datanyze, Shutterstock is the leading stock image site, with 70 percent of the market. Adobe and Getty Images also offer stock images, though with far smaller market shares.

Getty Images is following Shutterstock’s lead, saying it will train models on fully licensed content and pay royalties to content creators, according to an Nvidia blog post released today.

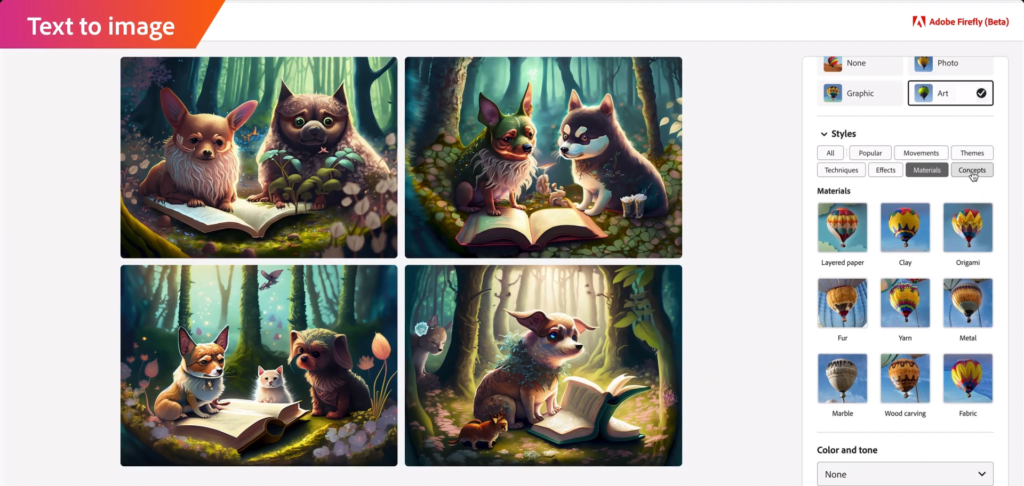

Adobe, meanwhile, is going all-in on generative AI, planning to embed the technology across its full suite of services, including Photoshop.

I haven’t tried Firefly yet. It’s currently in closed beta, and I’m still waiting for access. But the company says people can already use it to create images from text prompts.

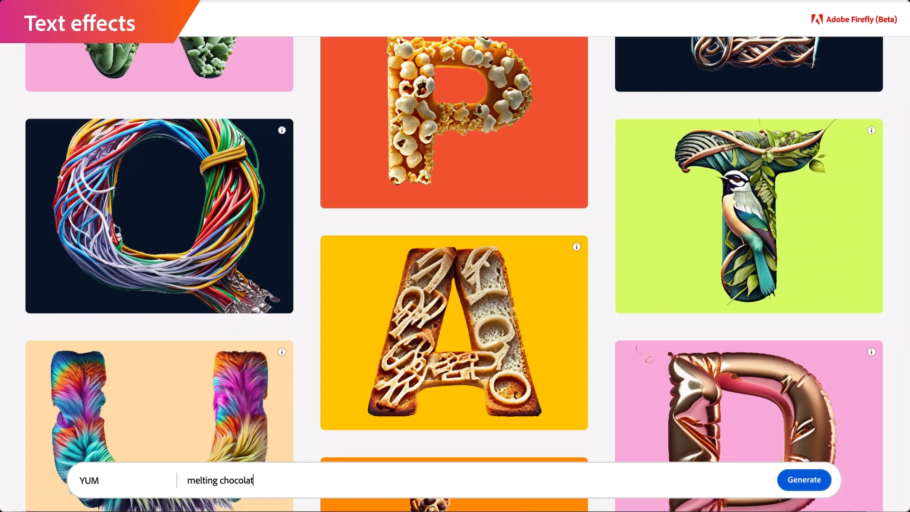

Adobe also allows you to create cool text effects.

In the future, users will also be able to replace parts of an image using text prompts, zoom out of an image to see more of the surrounding space, generate editable vector images from a text prompt, create seamless patterns and custom Photoshop brushes, turn sketches into full-color images, and build personalized AI models based on their own styles.

“Adobe’s intent is to build generative AI in a way that enables customers to monetize their talents, much like Adobe has done with Adobe Stock and Behance,” the company said in an announcement earlier today. “Adobe is developing a compensation model for Adobe Stock contributors and will share details once Adobe Firefly is out of beta.”

The company is also promoting a universal “Do Not Train” tag that creators can attach to their content to keep it from being used to train AI models without their permission.

And you can learn more about what Nvidia is doing with generative AI in the keynote below, which was posted earlier today.

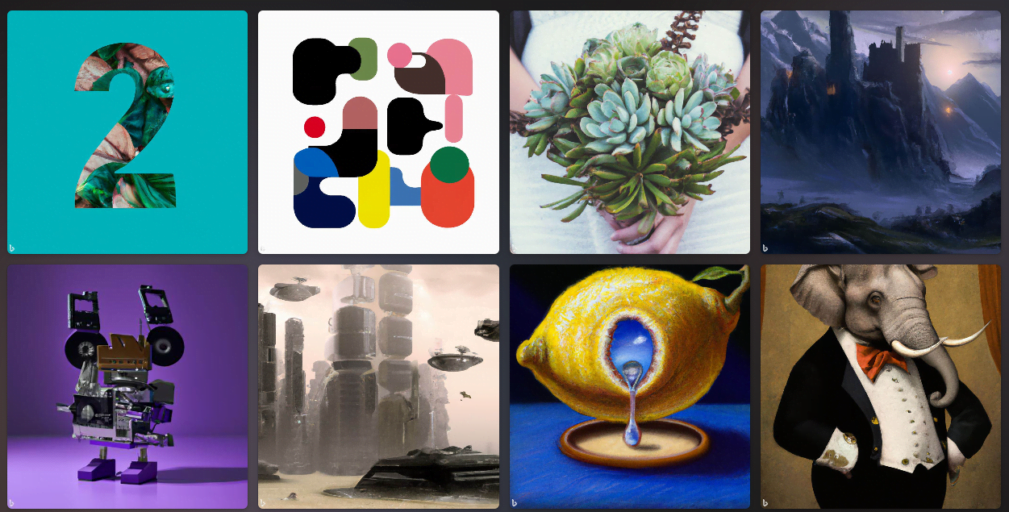

Finally, not to be left out, Microsoft today launched Bing Image Creator, a free image generator powered by OpenAI’s DALL-E 2. I couldn’t find any information about whether Microsoft will compensate artists whose work was used as training data. On the plus side, you don’t have to use Edge to access the tool.

I tried it out, and the system is very, very slow, probably because it’s swamped with requests right now.

OpenAI hasn’t exactly been forthcoming lately about allowing creators, whether writers or artists, to opt out of its training data sets, or, better yet, about using only content whose creators have explicitly given permission.

Meanwhile, writers using free Stable Diffusion-based AI image generators, such as the ones I list in my 5 free Midjourney alternatives article, will soon have access to models trained without the work of artists who don’t want their art used for training.

That’s because Stability AI, the company behind Stable Diffusion, announced last December that it would honor artists’ requests to be excluded from its training data. It’s a good first step, but explicit opt-in permissions would be better still.

Artists can visit the website Have I Been Trained to see whether their work has been used to train AI models and to opt out of any future use.

These opt-outs are expected to take effect in Stable Diffusion version 3. The opt-out cutoff date for that model was March 3, and according to news reports, 80 million images have been removed from the training data as a result.

The official release date for Stable Diffusion 3 hasn’t been announced yet, but it shouldn’t be long if Stability AI is already assembling the training data.

What should you do until then if you’re using AI image generators to help you create book covers, marketing materials, blog post illustrations, and other visual content?

My recommendation is to avoid using “in the style of” with the names of modern artists in your prompts until payment processes and opt-out measures are fully in place. So, instead of using the name of a famous comic book artist, just say “in the style of a comic book.” Yes, you’ll get a more generic image, but if you’re a content creator yourself and want to respect others’ content rights, you can hold off for a little while until all the legal protections are in place.

MetaStellar editor and publisher Maria Korolov is a science fiction novelist, writing stories set in a future virtual world. And, during the day, she is an award-winning freelance technology journalist who covers artificial intelligence, cybersecurity and enterprise virtual reality. See her Amazon author page, follow her on Twitter, Facebook, or LinkedIn, and check out her latest videos on the Maria Korolov YouTube channel. Email her at maria@metastellar.com. She is also the editor and publisher of Hypergrid Business, one of the top global sites covering virtual reality.