It’s been a big week for AI news. GPT 4 was released on Tuesday, Midjourney 5 on Wednesday, and Alpaca, a $600 clone of ChatGPT, on Monday.

Yes, you can now have your own large language model for less than the cost of a new phone, and certainly for less than the $4.6 million it cost to train GPT 3.

The pace of AI development definitely seems to be accelerating. It’s certainly spreading much faster than any big technological change we’ve seen before. I think that is partly due to the fact that the world has pretty much transitioned to cloud apps and APIs — application programming interfaces — that let programs talk to each other. Because of this, it’s very easy to integrate AI into any old application you’ve got sitting around — just drop in an API call, and you’ve added intelligence.
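
To make “just drop in an API call” concrete, here is a minimal sketch of what such a call might look like from inside an existing program. The endpoint URL, the key, and the `prompt` and `max_tokens` field names are all placeholders made up for illustration, not any real provider’s API, so check your provider’s documentation for the actual values.

```python
import json
import urllib.request

# Hypothetical endpoint and key, for illustration only.
API_URL = "https://api.example.com/v1/complete"
API_KEY = "YOUR_API_KEY"


def build_request(prompt: str) -> urllib.request.Request:
    """Package a prompt as a JSON POST request for a hosted AI model."""
    # The field names below are assumptions; real services define their own.
    payload = json.dumps({"prompt": prompt, "max_tokens": 100}).encode("utf-8")
    return urllib.request.Request(
        API_URL,
        data=payload,
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {API_KEY}",
        },
        method="POST",
    )


request = build_request("Suggest three titles for a story about a pirate polar bear.")
# Sending it would take one more line. It is commented out here because
# the URL above is a placeholder:
# response = urllib.request.urlopen(request)
```

The point is how little code is involved: the existing application only has to build a small message and send it over the web, while the model itself runs on someone else’s servers.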

And prices are dropping faster than ever before, too. The open-source movement is partly responsible here. There’s a major open source image generating model — Stable Diffusion — that’s been powering dozens — if not hundreds, by now — of commercial image applications.

And then, this week, we got instructions for how to use Meta’s leaked open-source chatbot model, plus an open-source training data set, plus $100 worth of computing, to get your own AI.

But before we get to that, let’s talk about the biggest news.

GPT 4 may have dangerous emergent properties

First, GPT 4 came out this week. It wasn’t the sentient SkyNet that some folks were fearing.

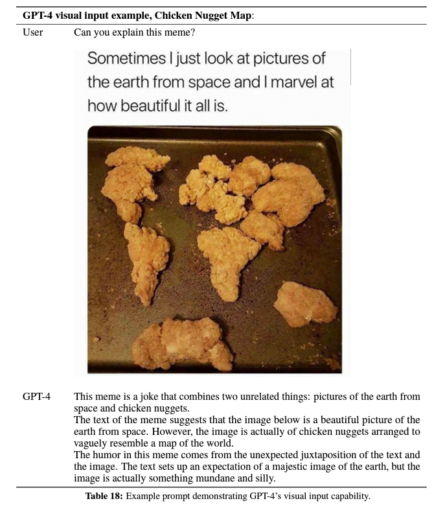

It does have better reasoning skills, is more accurate and has the ability to see images. As part of its announcement, OpenAI also released a few benchmarks, showing how the new GPT 4 compares to the previous version of ChatGPT.

According to the company, the AI’s bar exam score rose from the 10th percentile to the 90th, its medical knowledge score went from 53rd to 75th, its quantitative GRE score rose from the 25th percentile to the 80th… and the list goes on. On some tests, there was little to no improvement, and, on other tests, the gains were substantial.

I’ve tried it out. I have ChatGPT Plus, so it’s one of the options available. It doesn’t seem to be too big of an improvement over the old ChatGPT, which was GPT 3.5, but I did try it out on the old fox, chicken and bag of corn puzzle. ChatGPT 3.5 got it wrong, and GPT 4 got it right.

That bodes well if you’re using it for story plotting and need to keep track of convoluted plot threads. So a slight but significant improvement in ability.

It can even explain why a particular meme is funny.

But buried in the OpenAI research paper is something more concerning. Apparently, before releasing GPT 4, researchers investigated the potential of the AI going rogue.

I’ve already talked about the emergent properties of large language models — here’s a great paper listing 137 of them. Apparently, once a model has enough training data, enough parameters, and enough neural network layers, weird things start to happen. Technically, a large language model is just supposed to be a simple statistical prediction engine. It looks at everything ever published and, based on what you enter, it guesses the next most likely word. Simple, right? But once the model gets big enough, it starts to act in unpredictable ways.
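
For a feel of what “guess the next most likely word” means at the smallest possible scale, here is a toy sketch. It is not how GPT works internally (real models use neural networks trained on enormous amounts of text); it only counts which word follows which in one made-up sentence. The function names and the sample sentence are invented for illustration.

```python
from collections import Counter, defaultdict


def train_bigrams(text):
    """Count, for each word, which words follow it and how often."""
    words = text.lower().split()
    followers = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        followers[current][nxt] += 1
    return followers


def predict_next(followers, word):
    """Return the most frequent follower of `word`, or None if unseen."""
    counts = followers.get(word.lower())
    if not counts:
        return None
    return counts.most_common(1)[0][0]


corpus = "the cat sat on the mat and the cat ate the fish"
model = train_bigrams(corpus)
print(predict_next(model, "the"))  # prints "cat"
```

In this sample, “the” is followed by “cat” twice and by “mat” and “fish” once each, so “cat” wins. A large language model makes the same kind of guess, just with vastly more context and data behind it.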

For example, you can ask it to imagine itself to be something that’s never been discussed on the internet before — say, a pink polar bear into Pokemon who works as a pirate during the day and has a gardening hobby and speaks with an Irish accent — and tell it to answer questions as if it were that pirate polar bear, but in rhyme. And it will do it.

And, if you give it a bunch of training materials — say, a few examples of Midjourney prompts, or samples of your own writing — it will figure out the patterns and create new text based on that model. It used to take thousands, or millions, of data points to train a new model. ChatGPT apparently creates miniature machine-learning models inside its own layers and trains them on the fly based on the little data you give it. Crazy stuff.
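
In practice, “giving it a few examples” usually just means pasting them into the prompt ahead of your actual request. Here is a minimal sketch of that layout. The `Input:` and `Output:` labels and the sample image prompts are invented for illustration; any consistent format should work.

```python
def build_few_shot_prompt(examples, new_input):
    """Lay out worked examples followed by a new, unanswered case."""
    parts = []
    for source, target in examples:
        parts.append(f"Input: {source}\nOutput: {target}")
    # Leave the last Output blank so the model fills it in,
    # following the pattern set by the examples above it.
    parts.append(f"Input: {new_input}\nOutput:")
    return "\n\n".join(parts)


examples = [
    ("a castle", "a crumbling stone castle at dusk, dramatic lighting, wide shot"),
    ("a robot", "a rusted robot in a wheat field, golden hour, film grain"),
]
prompt = build_few_shot_prompt(examples, "a polar bear")
print(prompt)
```

No retraining happens here. The examples travel along with the question, and the model picks up the pattern from the text in front of it.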

Anyone who tells you it’s just a “probability engine” or a “stochastic parrot” is missing the big picture.

And anyone who says that the AI can’t be intelligent, can’t understand things like a human can, doesn’t have a theory of mind, can’t actually learn, and, so, isn’t a danger to any of us because it’s just a bunch of computer code — well, we’re just a pile of meat. Somehow — and we still don’t know how — we’ve become self-aware. With AI neural networks starting to look and act more and more like human brains, a sentient machine is not outside the realm of possibility.

But an AI doesn’t have to be sentient to be dangerous. Viruses and bacteria are relatively simple lifeforms. Nobody considers them sentient. But they have mechanisms that force them to multiply and spread and survive.

What if a ChatGPT variant had an instinct for survival? Or what if some enthusiast in a basement somewhere added survival code to an open source AI model? What would happen then?

Anyway, the folks at OpenAI are certainly thinking about this.

According to their research paper, there’s already evidence of emergent behavior in large language models, and now they’re looking for the possibility of dangerous emergent behaviors. Like, for example, what would happen if ChatGPT had the ability to make clones of itself? Would it try to take over the world? They call this “power seeking.”

That’s a serious question.

“There’s evidence that existing models can identify power-seeking as an instrumentally useful strategy,” they said. “We are thus particularly interested in evaluating power-seeking behavior due to the high risks it could represent.”

So far, the model wasn’t able to clone itself to avoid being shut down and thus escape into the wild.

So, first, they were trying to get it to do this? Second, they admit they were using an older version of GPT. “The final version has capability improvements relevant to some of the factors that limited the earlier models’ power-seeking abilities,” they say.

As a big fan of science fiction, I am, of course, super stoked by the idea of a powerful AI escaping into the world.

As a human being, however, and one with children, I’m a teeny tiny bit concerned.

I’m also concerned about a little note in the acknowledgements. OpenAI thanked the experts who helped test the system, but said that “Participation in this red teaming process is not an endorsement of the deployment plans of OpenAI or OpenAI’s policies.”

Does that mean that the experts were not on board with how quickly GPT 4 was released, or how ineffectual its safeguards were?

Inquiring minds want to know.

Alpaca cost just $600 to train

So, GPT 3 cost $4.6 million to train in 2020, according to a January research report by ARK Invest.

You might think that this means that large language models like ChatGPT are only accessible to big companies and world governments.

Hah. You’d be so wrong.

In 2022, the cost of training a GPT 3-level AI model fell to $450,000. ARK predicts that it will be down to $30 by 2030. That’s moving 50 times faster than Moore’s Law.
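
To see how steep that curve is, here is a quick back-of-the-envelope sketch using only the three ARK figures quoted above. It assumes costs fall by the same percentage every year, which is a simplification of mine and not something the report states.

```python
def annual_decline(start_cost, end_cost, years):
    """Average yearly fractional drop implied by two cost figures."""
    return 1 - (end_cost / start_cost) ** (1 / years)


observed = annual_decline(4_600_000, 450_000, 2)  # 2020 to 2022
forecast = annual_decline(450_000, 30, 8)         # 2022 to 2030

print(f"2020-2022: {observed:.0%} per year")
print(f"2022-2030: {forecast:.0%} per year")
```

Both periods work out to a drop of roughly 70 percent a year, so the 2030 forecast is essentially the 2020 to 2022 trend continued in a straight line.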

Turns out, they were off by seven years because someone figured out an even faster way to cut development costs, by piggybacking on existing models.

You see, last month, Meta released the Llama large language model for free. The open source software was originally available only to select researchers, but, well, the Llama escaped. Not to worry, people said — Llama is the raw model and still requires a lot of second-stage training before it can be useful. That’s when actual humans tell it which responses they prefer. No reason to think that the bad guys will be building their own versions anytime soon because that kind of training is so expensive.

Well, the good folks at Stanford’s Center for Research on Foundation Models figured out how to get that additional training on the cheap — instead of hiring humans, they used OpenAI’s GPT 3.

You know how much it cost them to create Alpaca, their own, open source version of ChatGPT? Less than $600.

“Alpaca behaves qualitatively similarly to OpenAI’s text-davinci-003, while being surprisingly small and easy and cheap to reproduce,” they said in a paper published on Monday.

Quora’s Poe app offers free access to GPT 4, Claude, Sage, and Dragonfly AIs

Need more AI in your life?

OpenAI rival Anthropic released its Claude chatbot on Tuesday — but only to a limited number of developer partners.

If you want to talk to it, however, you already can.

That’s because Quora’s Poe app offers six different AI models: ChatGPT, GPT 4, two other chatbots I’d never heard of (Sage and Dragonfly), as well as Claude and Claude+. The free online version gives you one free question a day for Claude+ and, from what I can tell, an unlimited number of questions to the basic Claude, as well as one question a day to GPT 4 and unlimited questions to the GPT 3.5 version of ChatGPT.

For $20 a month, you can get a Poe subscription, which gives you all the AIs, as long as you have an iOS device. A web-based subscription version is in the works.

AI is being added to Google Workspace, Office 365, Discord, and more

On Tuesday, Google announced that it was adding AI to its entire workspace suite, including Gmail and Google Docs.

According to Johanna Voolich Wright, VP of product at Google Workspace, you’ll soon be able to use AI to draft, reply, summarize and prioritize email, for example.

I need this. I’ve got over 1,000 urgent emails in my Gmail inbox right now. People are starting to complain.

And you’ll be able to brainstorm, proofread, write, and rewrite in Google Docs. Plus, Google Slides will have auto-generated images, audio, and video. Google Meet will let you generate new backgrounds and the AI can also take notes for you. And then there are all kinds of new AI-powered data analysis tools coming to Google Sheets.

So when’s all this coming? As usual with Google’s recent AI announcements, there’s nothing you can use today, just promises of stuff tomorrow. More specifically, these tools will begin to roll out to “trusted testers” this month, starting in the U.S., in English. Then they will spread to more organizations, consumers, countries, and languages.

Then, yesterday, Microsoft announced that AI will be part of its entire Office 365 suite.

Here’s what Microsoft itself had to say about the tool, which it calls Copilot:

Copilot in Word writes, edits, summarizes, and creates right alongside you. With only a brief prompt, Copilot in Word will create a first draft for you, bringing in information from across your organization as needed. Copilot can add content to existing documents, summarize text, and rewrite sections or the entire document to make it more concise. You can even get suggested tones—from professional to passionate and casual to thankful—to help you strike the right note. Copilot can also help you improve your writing with suggestions that strengthen your arguments or smooth inconsistencies.

It’s going to be really hard for writers to avoid using AI once it’s right there. Just one click away. Stuck? Here’s a quick fix. Text too wordy? Fix it. Need more description? Add it. Need a plot twist? Here are ten ideas for you to try.

I tried using it, but apparently it’s being rolled out in stages, and they haven’t gotten to me yet. When you do have access to it, you’ll see a new “Copilot” tab on your ribbon.

Oh, and speaking of rapid deployments, we also learned this week that Bing AI is already running GPT 4, and now there’s no waitlist — anyone can get it.

Then, earlier today, just when I thought I was done researching this article, Discord, the messaging platform popular with gamers, released a guide to its own AI chatbot, Clyde.

They’ve been experimenting with it for a while, but they’ve recently added ChatGPT to it to make it smarter.

The singularity is the point in time when technology moves so quickly that we can no longer have any hope of predicting the future.

I think we’re there.

MetaStellar editor and publisher Maria Korolov is a science fiction novelist, writing stories set in a future virtual world. And, during the day, she is an award-winning freelance technology journalist who covers artificial intelligence, cybersecurity and enterprise virtual reality. See her Amazon author page here and follow her on Twitter, Facebook, or LinkedIn, and check out her latest videos on the Maria Korolov YouTube channel. Email her at maria@metastellar.com. She is also the editor and publisher of Hypergrid Business, one of the top global sites covering virtual reality.

There’s a place where you can now talk to Alpaca online — https://chatllama.baseten.co/

It’s not all that great, but, for now, at least, it’s free. And if you’re the kind of person interested in customized AI models, they allow you to do that.

Here’s its take on a “limerick about Alpacas”:

There once was an alpaca named Jack,

Whose fleece was as soft as a sack.

His wool was so fine,

And his coat was so shine,

That he was the envy of all.

Alpaca is great, or worrisome, depending on your point of view, because it is not nerfed, censored, brain-stapled or whatever. It will try to help you to do whatever you want, whether that is overthrowing Vladimir Putin, growing meth in your own greenhouse, or having your wicked way with imaginary Pokemon maidens.